Generative AI’s rising threat to user-generated content platforms

June 23, 2023 | UGC

In the heart of our digital era, the line between reality and artifice is becoming increasingly blurred. The rise of generative artificial intelligence – a breed of AI capable of concocting anything from text and images to alarmingly convincing human voices – has thrust us into a new dimension of ambiguity. Where once the internet was our portal to the world, a conduit of information and a playground for creativity, it is now morphing into a landscape fraught with uncertainty and deception. The democratization of content creation, once celebrated as a cornerstone of the digital revolution, has encountered a vexing complication in generative AI, a challenge which, paradoxically, is also a child of the same revolution.

Platforms for user-generated content (UGC) stand at the epicenter of this new frontier. These sites, designed to empower individual expression and foster community, are now finding themselves at a crossroads of innovation and deceit, grappling with threats they’ve never faced before. From the dissemination of deepfakes and offensive images to thorny issues around copyright infringement, the challenges are numerous.

The authenticity of content, the bedrock of any UGC platform, is under threat. Deepfakes, a sinister application of generative AI, have begun to infiltrate our digital spaces, sowing falsehoods and confusion. Beyond the glaring risk of misinformation and deception, there are subtler, perhaps more corrosive implications – the erosion of trust. For users, who have long held faith in the relative truthfulness of the digital world, the advent of indistinguishable counterfeits threatens to shake that confidence.

At the same time, the potential for copyright infringement looms large. Generative AI, trained on vast pools of data, can reproduce content that mirrors the style of specific artists or creators. The resulting works, however innovative, call into question issues of consent and rightful credit, upending traditional definitions of intellectual property.

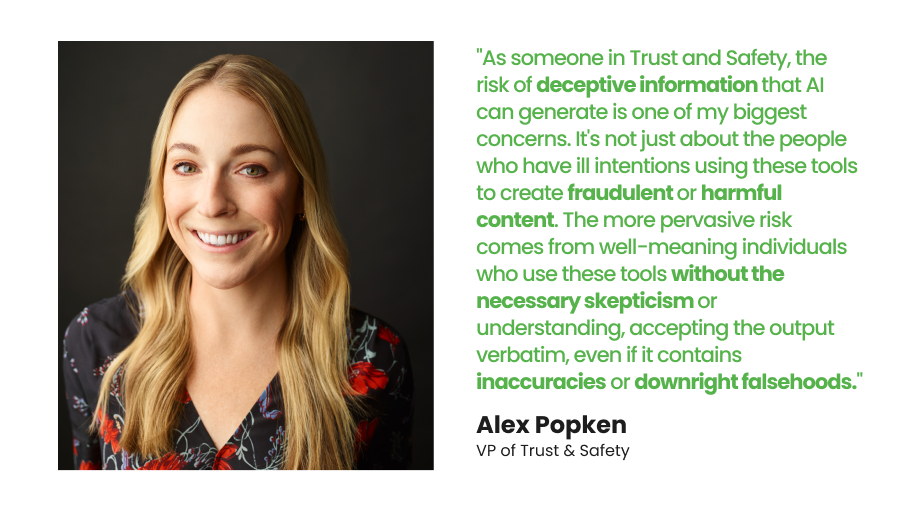

Navigating this storm is no easy task, and it is one that keeps many content moderators up at night. Charged with maintaining order amid the growing chaos, content moderation teams face a Goliath: generative AI capabilities that enable bad actors to create sophisticated, toxic content at scale. We spoke to Alex Popken, WebPurify’s Vice President of Trust & Safety, to get her insights on the seismic shifts in the landscape of UGC platforms and the path forward.

“As someone in Trust and Safety, the risk of deceptive information that AI can generate is one of my biggest concerns,” she says. “It’s not just about the people who have ill intentions using these tools to create fraudulent or harmful content. The more pervasive risk comes from well-meaning individuals who use these tools without the necessary skepticism or understanding, accepting the output verbatim, even if it contains inaccuracies or downright falsehoods. That keeps me up at night.”

As a result, generative AI has a profound effect on the perceived trustworthiness of UGC platforms, introducing ambiguity and uncertainty into the user experience.

“An example of this could be really convincing scams in the online dating space: someone could leverage generative AI to create a really persuasive fake profile and identity,” Alex points out. “Today, many scammers rely upon stock photos that are easy to reverse image search, or their in-app messages are riddled with grammatical issues and fairly easy to spot. Imagine a world in which scammers are able to leverage AI to become that much more untraceable.”

When scammers can exploit AI to fabricate persuasive identities, it erodes the authenticity of user interactions.

Parallel to these concerns lies a sprawling debate over the copyright implications of generative AI. A new generation of artists might wield AI as an instrument of creative innovation, but this reality teeters on the edge of a legal quagmire. Because generative AI models are trained on extensive datasets, the resulting art or music could tread uncomfortably close to the line of intellectual property infringement.

“GenAI models have been trained on millions of pieces of data,” Alex points out. “You could ask it to generate fine art in the style of a particular artist, yet that artist has not consented nor receives credit or profit. The same challenges apply to music and creating songs in the vocal likeness of an artist. It’s a slippery slope.”

There is no single, catch-all solution to these challenges. Tech firms must take responsibility for moderating their generative AI technology, employing a blend of machine learning algorithms and human judgment to manage content. Yet the path is rife with complexities, including disinformation, algorithmic bias and the intricacies of intellectual property rights.

“I don’t think brands have a lot of control over whether or not their users bring AI-generated content to their platforms – AIGC is becoming ubiquitous,” Alex says. “The best they can do is ensure that they have robust content moderation protections in place to remove harmful content that is user- or AI-generated. Companies should also ensure that their community guidelines include AI abuse vectors, such as harmful synthetic or manipulated media. As an example, TikTok recently updated its guidelines to account for AI misinformation and it’s requiring users to disclose when media has been altered by AI.”

Deepfakes, potent tools for digital impersonation, are another formidable challenge for users and platforms alike. A reliable detection system remains elusive, and getting there will require further developments in AI ethics and regulatory oversight. In a world where fact and fiction are increasingly indistinguishable, the importance of that work cannot be overstated.

Generative AI also opens up new avenues for fraud and deception. Picture fraudsters creating synthetic IDs to bypass verification checks, or using voice cloning to stage elaborate scams. These chilling scenarios are not merely threats looming on the horizon; in some well-publicized cases, they have already played out.

Yet AI isn’t just an agent of chaos; just as it remixes and creates, it can also repeat, mirroring and perpetuating our biases. The growing prevalence of AI-generated content introduces the risk of reinforcing harmful stereotypes, which demands rigorous oversight and constant effort to identify and eliminate such biases from AI models.

“The challenge with these models is that the data they’re trained on can be biased – it can, for example, perpetuate tropes based upon race, gender or religion,” Alex says. “We’ve also seen instances in which facial recognition systems have been biased toward specific racial and ethnic groups. It’s very important that companies building this technology prioritize research and roles dedicated to responsible AI and ensuring these biases are detected and removed. There should also be transparency around data used to train AI, requiring companies to disclose this information.”

As AI-generated content weaves itself further into the fabric of our digital interactions, user trust and experience will inevitably be affected. Brands will be tasked with maintaining transparency and updating their community guidelines to account for AI-generated content. Collaborative dialogues are already taking place between generative AI companies and governments, though the speed and appropriateness of regulatory measures remain a matter of speculation in countries like the United States.

“The companies developing generative AI have a responsibility to ensure that their technology is appropriately moderated,” Alex says. “Many of the major players conduct red team exercises whereby they have trust and safety analysts purposely try to expose gaps so they know what they need to plug. Many of these companies also have guardrails in place that prevent users from, say, asking genAI how to make a homemade bomb – but there are still gaps and we’ve seen these widely publicized.”

On a more hopeful note, Alex says that AI also presents numerous opportunities for positive transformation, in sectors like healthcare and in making trust and safety teams more effective. From assisting with accurate medical diagnosis to helping trust and safety teams root out problematic content and conduct at scale, there are plenty of beneficial applications of AI to be excited about.

Meanwhile, trust and safety teams around the world are already battling the dark side of this technology, laying the foundation for a more secure digital future. According to Alex, the interplay between generative AI and UGC platforms is bound to intensify, bringing with it a bevy of novel challenges that will test our collective adaptability.

“In terms of moderation, it’s still really a combination of machines and people – people to write the rules, govern, and help expose weaknesses, and AI to layer on top and moderate input and output,” Alex says. “I think this is a massive opportunity in the technology sector and something that we are thinking about at WebPurify. A key component of this will be AI ethics and regulation and the extent to which AI companies are required to disclose what has been AI-generated.

“What will continue to be a significant challenge is combatting complex areas like disinformation – AI isn’t great at this, yet.”

As we move further into this uncharted territory, the key takeaway is clear: AI technology is a double-edged sword, capable of both creating and mitigating risks. Our ability to navigate this labyrinth of challenges will define the future of our digital lives, shaping how we interact, share and consume content in the virtual world. As content moderators, we find ourselves in the midst of a grand game of cat and mouse, where the stakes are high, and the outcome will define our collective future in the digital age.
